To really move the needle on CSAT, SaaS teams need to get laser-focused on three things: delivering proactive support, creating personalised customer engagement, and genuinely closing the feedback loop. Nail these, and you’ll turn customer satisfaction from a passive number on a dashboard into a powerful engine for real, sustainable growth.

Why CSAT Is a Leading Indicator for Growth

It’s tempting to look at your Customer Satisfaction (CSAT) score as just another KPI to track. But for a growing SaaS business, it’s much more than that—it’s a crystal ball for your future success. Think of it less like a report card on past performance and more like a forecast of your company’s health.

High CSAT scores aren’t just vanity metrics; they’re directly tied to the business outcomes every founder obsesses over.

When your customers are consistently happy, they’re far less likely to churn. This isn’t just a hunch. We’ve seen how even a tiny 1% shift in customer satisfaction can correlate with a significant jump in customer loyalty. And loyal customers don’t just stick around—they become your most valuable asset.

The Direct Link to Revenue and Retention

Happy customers are the lifeblood of any healthy subscription model. Their satisfaction fuels growth in a few critical ways:

- Lower Customer Churn: Satisfied users see the value in your product day in and day out, which means they have little reason to shop around for alternatives. Widely cited research from Bain & Company suggests that a 5% increase in customer retention can boost profits by 25% or more.

- Increased Lifetime Value (LTV): When customers stay longer, their LTV naturally goes up. They’re also much more open to upgrading their plan or adding new features, which directly pumps up your revenue.

- Powerful Brand Advocacy: Delighted customers become your best (and cheapest) marketing channel. They rave about your product to their friends and colleagues, driving a steady stream of high-quality organic leads through word-of-mouth.

The way customers feel about your business is exactly what they’ll tell others. Those individual stories, when multiplied, become your brand’s reputation.

Before we dive into specific tactics, it’s crucial to understand the foundational strategies that make a CSAT improvement plan work. The table below summarises the three core pillars we’ll build upon throughout this playbook.

Core Pillars for Improving CSAT in SaaS

| Pillar | Key Objective | Example Tactic |
|---|---|---|
| Proactive Support | Solve problems before they happen by anticipating user needs and friction points. | Creating in-app guides for complex features that users often struggle with. |
| Personalised Engagement | Make customers feel seen and valued, not like another number in a queue. | Sending targeted onboarding emails based on a user’s specific goals or role. |
| Closing the Feedback Loop | Show customers their feedback matters by acting on it and communicating changes. | Following up with users who reported a bug to let them know it’s been fixed. |

Ultimately, learning how to improve CSAT isn’t about chasing a score—it’s about building a customer-obsessed culture from the ground up.

Throughout this playbook, we’ll unpack a framework built on these three strategies. We’re moving beyond simply collecting data to truly understanding what it means and, more importantly, acting on it. For a deeper dive, you can learn more about unlocking growth with feedback from users in our detailed guide.

Mastering these pillars is the essential first step. Once you have this foundation in place, you can start implementing the specific tactics that drive real, measurable change and turn satisfied users into lifelong advocates for your brand.

Building a Smarter CSAT Measurement Framework

If you really want to improve customer satisfaction, the first thing you need to do is stop treating it like a single, monolithic number. A high overall score can easily mask pockets of deep frustration within your user base, giving you a false sense of security. The real trick is to move away from generic, once-a-year surveys and build a strategic framework that asks the right questions at the moments that actually matter.

This isn’t just about measuring satisfaction; it’s about diagnosing it. A smart framework turns CSAT from a vanity metric into a powerful diagnostic tool, pinpointing friction points and uncovering opportunities you never knew you had.

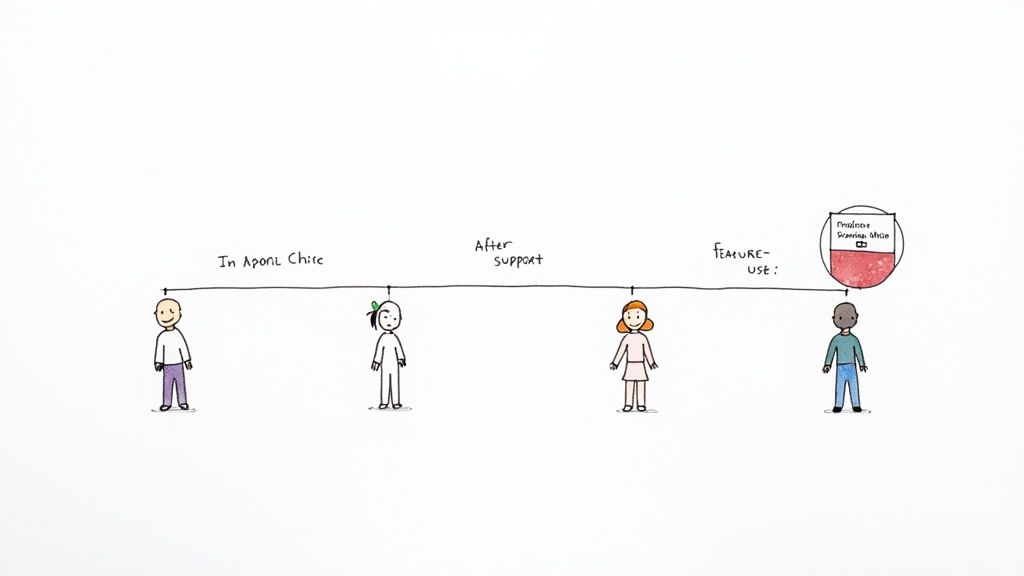

This image gives you a simplified look at a customer journey, showing some of the key moments where feedback is most valuable.

Mapping your own journey in a similar way helps you spot the perfect moments to pop the question, ensuring the responses you get are both timely and ridiculously relevant.

Identify Critical Customer Touchpoints

Instead of a scattergun approach, take the time to map out the make-or-break moments in your customer journey. These are the interactions where a user’s perception of your product is formed, reinforced, or completely shattered. Getting this right is the foundation of any decent strategy for improving CSAT.

Think about deploying surveys at these high-impact moments:

- Post-Onboarding: Just after a user finishes their initial setup or checklist.

- After First Major Feature Use: The moment they’ve achieved their first “aha!” with your product.

- Post-Support Interaction: Immediately after a support ticket has been marked as resolved.

- After an Upgrade or Downgrade: To get the raw truth behind their decision.

This targeted approach gives you contextual feedback you can actually act on. A low score after onboarding points to a completely different problem than a low score after a chat with support.

Choose the Right Survey Channel

How you ask for feedback is just as important as when you ask. Every channel has its own vibe, and picking the right one can dramatically boost your response rates and the quality of the feedback you receive.

- In-App Pop-ups: These are brilliant for immediate, contextual feedback tied to a specific feature or workflow. They grab attention but can feel intrusive if you overdo it.

- Email Surveys: Perfect for relationship-based feedback or post-support follow-ups. They give customers the space to write more detailed responses without yanking them out of their workflow.

The goal is to make giving feedback feel like a natural part of the user experience, not a chore. The less friction you create, the more data you’ll collect.

It’s clear that customer experience is becoming a top priority everywhere. Take Southeast Asia, where improving customer satisfaction has become a core business strategy. The 2025 State of Customer Experience in Indonesia report found that 72% of organisations now see customer experience as a high priority—a massive jump from just 24% the year before. This shift just goes to show how much value companies are placing on skilled leadership and solid measurement frameworks. You can discover more insights about the state of CX in the region and see how seriously the top players are taking this.

Ask Smarter Questions

It’s time to move beyond the bland “Are you satisfied?” question. To get insights you can actually use, you need to dig a bit deeper. A golden rule is to always pair your rating question with an open-ended follow-up.

Here are a few examples to get you started. Where the question is a rating or a yes/no, follow it with an open-ended “Why?”:

- For post-onboarding: “How easy or difficult was it to get started with our product today?”

- For a specific feature: “What is one thing we could do to make this feature better for you?”

- For support interactions: “Did our support agent fully resolve your issue today?”

These kinds of questions give you both the quantitative data (the score) and the qualitative context (the why). And let’s be honest, the context is where the real gold is hidden.
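If you wire these questions into your product, a small lookup table keeps the trigger, channel, and wording in one place. Here’s a minimal Python sketch of that idea. The event names, channel labels, and the generic follow-up wording are placeholders invented for illustration; they don’t come from any particular survey tool.

```python
# Hypothetical event names mapped to the survey shown at that touchpoint.
# The questions are the examples from this section; everything else is assumed.
GENERIC_FOLLOW_UP = "What's the main reason for your answer?"  # placeholder wording

SURVEYS = {
    "onboarding_completed": {
        "channel": "in_app",
        "question": "How easy or difficult was it to get started with our product today?",
        "follow_up": GENERIC_FOLLOW_UP,
    },
    "feature_first_used": {
        "channel": "in_app",
        "question": "What is one thing we could do to make this feature better for you?",
        "follow_up": None,  # already open-ended, so no second prompt
    },
    "support_ticket_resolved": {
        "channel": "email",
        "question": "Did our support agent fully resolve your issue today?",
        "follow_up": GENERIC_FOLLOW_UP,
    },
}


def survey_for(event):
    """Return the survey config for an event, or None if we don't survey on it."""
    return SURVEYS.get(event)


print(survey_for("support_ticket_resolved")["question"])
```

Keeping the mapping as data rather than scattered `if` statements makes it easy to review which moments trigger a survey, and to spot when you’re asking too often.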

Segment Your Feedback for Deeper Insights

Finally, here’s where the magic really happens: segmenting your feedback. An aggregate score tells you very little on its own. The real story emerges when you start slicing the data by different user groups.

Try analysing your CSAT scores based on:

- Subscription Tier: Are your enterprise customers miles happier than those on your free plan? This could point to a value perception issue.

- User Persona: Do developers love your API docs while project managers find the UI a nightmare? This is your roadmap prioritisation, served on a silver platter.

- Customer Lifecycle Stage: Are new users over the moon but long-term customers getting grumpy? This might be a sign your product isn’t evolving with their needs.

Segmenting your data transforms it from a simple score into a detailed map showing you exactly where satisfaction is thriving and where it’s falling apart. This is absolutely critical for making informed decisions. For anyone looking to understand how this metric fits into the bigger picture, our guide on the differences and uses of CSAT and NPS offers some extra context.
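To make the slicing concrete, here’s a rough Python sketch that computes CSAT per segment. It assumes each response is a dict with a 1 to 5 `score` plus segment fields such as `tier` and `persona`, and that a rating of 4 or 5 counts as “satisfied”. Those field names and the sample data are made up for the example.

```python
from collections import defaultdict


def csat_by_segment(responses, key, satisfied_threshold=4):
    """Percentage of satisfied responses (score >= threshold) per segment value."""
    totals = defaultdict(lambda: [0, 0])  # segment -> [satisfied, total]
    for resp in responses:
        bucket = totals[resp[key]]
        bucket[0] += resp["score"] >= satisfied_threshold  # True counts as 1
        bucket[1] += 1
    return {seg: round(100 * sat / n, 1) for seg, (sat, n) in totals.items()}


# Hypothetical sample responses.
responses = [
    {"score": 5, "tier": "enterprise", "persona": "developer"},
    {"score": 4, "tier": "enterprise", "persona": "project_manager"},
    {"score": 2, "tier": "free", "persona": "project_manager"},
    {"score": 5, "tier": "free", "persona": "developer"},
    {"score": 1, "tier": "free", "persona": "project_manager"},
]

print(csat_by_segment(responses, "tier"))
print(csat_by_segment(responses, "persona"))
```

In this toy data the overall score is 60%, which hides the fact that one tier and one persona sit far below the others. That gap is exactly what the aggregate number can’t show you.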

By building a thoughtful framework like this, you’re setting the stage for targeted, effective improvements that actually move the needle.

How to Prioritise High-Impact CSAT Improvements

So, you’ve collected a mountain of customer feedback. That’s a great start, but it can get overwhelming—fast. You’re staring at a chaotic mix of brilliant ideas, minor annoyances, and outright bug reports, all screaming for your attention. The real challenge isn’t just listening; it’s figuring out what to act on first.

Drowning in suggestions is a classic problem, even for the most customer-obsessed SaaS teams. Without a solid system, you risk burning valuable engineering and support time on low-impact tweaks while the major satisfaction-killers slip right under the radar. The trick is to turn that raw feedback into a structured, actionable roadmap.

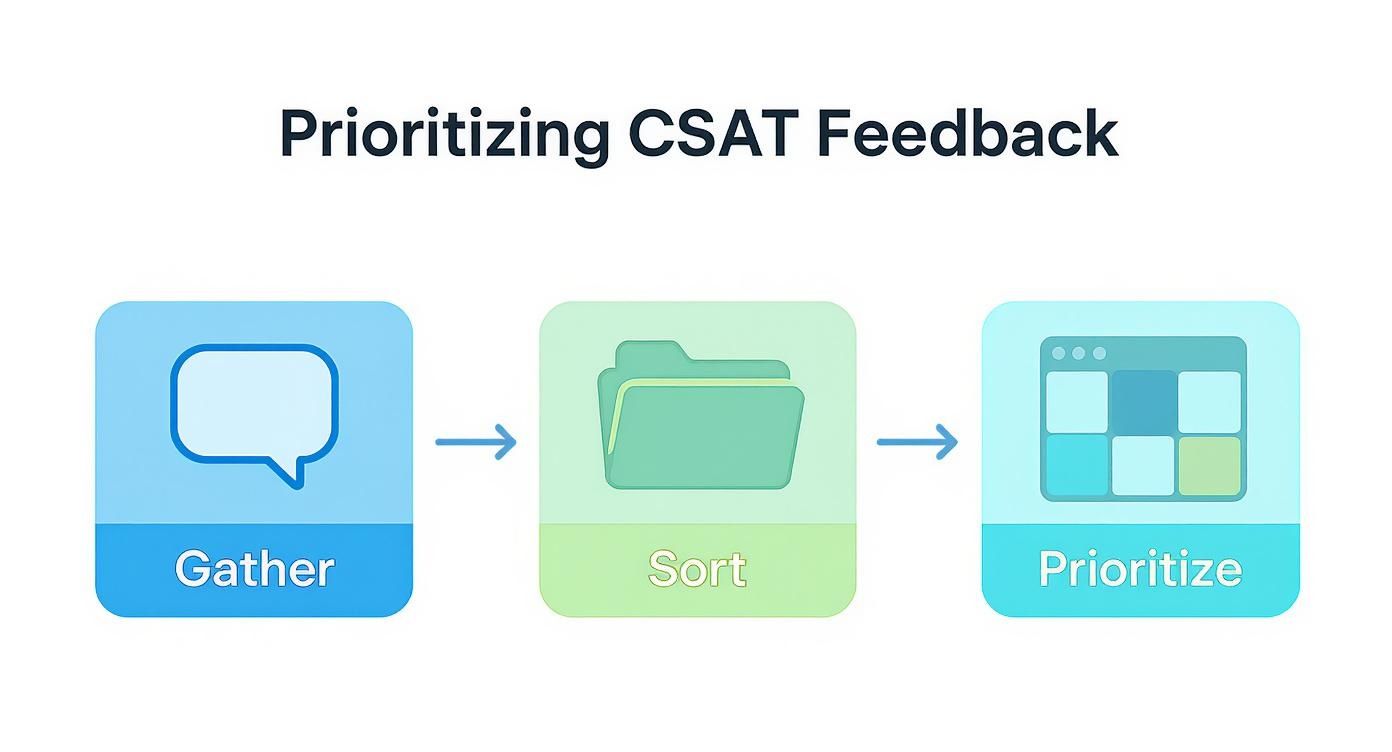

From Raw Feedback to Actionable Themes

First things first: stop looking at feedback as one long, intimidating to-do list. Your initial goal is to group individual comments into broader, strategic themes. This is how you turn chaotic noise into clear signals about your customer experience.

Start sorting every comment, ticket, and survey response into buckets. Pretty soon, you’ll see distinct patterns emerge.

- Product Gaps: These are the missing features or capabilities customers keep asking for. They’re usually tied to a specific job someone is trying to do but can’t.

- Usability Friction: This isn’t about what your product can’t do, but what it makes a pain to do. Think confusing navigation, too many clicks for a simple task, or just a clunky UI.

- Support Quality: Feedback here is all about interactions with your team. It could be slow response times, unresolved issues, or a support agent who just didn’t seem to get it.

- Onboarding Confusion: These comments are red flags that new users are struggling to find that all-important “aha!” moment. A confusing setup or unclear instructions can kill satisfaction before a customer even gets going.

By organising feedback this way, you shift from reactively stamping out individual fires to strategically addressing the root causes of frustration. It’s a fundamental step toward making any meaningful progress.
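If you’re sorting hundreds of comments, a first pass can be automated. The Python sketch below is a deliberately naive keyword tagger. The keyword lists are illustrative guesses, not a tested taxonomy, and plain substring matching will mislabel some comments, so treat the output as a starting point for human review.

```python
from collections import Counter

# Illustrative keywords per theme; tune these against your own feedback.
THEME_KEYWORDS = {
    "Product Gaps": ("missing", "wish", "would be great", "no way to"),
    "Usability Friction": ("confusing", "too many clicks", "clunky", "hard to find"),
    "Support Quality": ("support", "agent", "response time", "ticket"),
    "Onboarding Confusion": ("setup", "getting started", "onboarding", "instructions"),
}


def tag_themes(comment):
    """Return every theme whose keywords appear in the comment."""
    text = comment.lower()
    hits = [
        theme
        for theme, keywords in THEME_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    ]
    return hits or ["Untagged"]


def theme_counts(comments):
    """Count how often each theme shows up across all comments."""
    return Counter(theme for comment in comments for theme in tag_themes(comment))


comments = [
    "The setup instructions were unclear",
    "Too many clicks to export a report",
    "I wish there was a Gantt view",
    "Support agent took two days to reply",
]
print(theme_counts(comments).most_common())
```

Anything that lands in “Untagged” is worth reading by hand. It’s often where a new theme is hiding.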

Using the Impact vs. Effort Matrix

Once you have your themes, the next question is obvious: where do you begin? Not all fixes are created equal. This is where a simple but incredibly powerful tool—the impact vs. effort matrix—becomes your best friend.

The concept is beautifully straightforward. For each potential improvement, you plot it on a grid with two axes:

- Customer Impact: How much will this change actually improve our users’ experience and, hopefully, our CSAT score?

- Team Effort: How much time, money, and developer sanity will it take to get this done?

This little exercise forces you to visually map out your priorities and think critically about the return on investment for each action. It’s how you separate the easy wins from the resource-draining black holes.

Prioritisation isn’t just about what you decide to do; it’s about what you consciously decide not to do right now. The matrix gives you a defensible framework for making those tough calls.

Your goal is to zero in on the initiatives that land in the “High Impact, Low Effort” quadrant. These are your quick wins—the changes that will deliver the biggest bang for your buck with the least amount of internal strain. Fixing a confusing error message, for example, might be a low-effort task that has a massive impact on user frustration.
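If your backlog lives in a spreadsheet or a script, the matrix is easy to encode. This Python sketch assumes your team scores impact and effort from 1 to 5 and treats 3 and above as “high”. The scale, the cutoff, the sample scores, and the labels for the two lower-priority quadrants are all our own assumptions for illustration.

```python
QUADRANT_ORDER = ["quick win", "major project", "fill-in", "deprioritise"]


def quadrant(impact, effort, cutoff=3):
    """Place an idea on the matrix using 1-5 team estimates."""
    high_impact = impact >= cutoff
    low_effort = effort < cutoff
    if high_impact and low_effort:
        return "quick win"
    if high_impact:
        return "major project"
    if low_effort:
        return "fill-in"
    return "deprioritise"


def prioritise(ideas):
    """Sort ideas: quick wins first, then by higher impact and lower effort."""
    return sorted(
        ideas,
        key=lambda idea: (
            QUADRANT_ORDER.index(quadrant(idea["impact"], idea["effort"])),
            -idea["impact"],
            idea["effort"],
        ),
    )


# Hypothetical backlog with made-up scores.
ideas = [
    {"name": "Custom theme builder", "impact": 2, "effort": 4},
    {"name": "Redesign onboarding flow", "impact": 5, "effort": 5},
    {"name": "Fix confusing error message", "impact": 4, "effort": 1},
    {"name": "Rename a settings label", "impact": 1, "effort": 1},
]

for idea in prioritise(ideas):
    print(quadrant(idea["impact"], idea["effort"]), "-", idea["name"])
```

The scores themselves are still a judgement call. The value of writing them down is that the team argues about two numbers per idea instead of the whole backlog at once.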

Applying the Framework to Real Scenarios

Let’s see how this plays out with our feedback themes. Imagine you’ve identified “Onboarding Confusion” as a major source of low CSAT scores. You’ve got a few potential solutions on the table.

You could:

- A) Rewrite a few confusing tooltips: This is a low-effort job that could have a moderate impact on new user clarity.

- B) Create a short, in-app guided tour for the main feature: A medium-effort project, but with potentially high impact.

- C) Completely redesign the entire onboarding flow: This is a massive, high-effort, high-impact project that will likely consume a full quarter.

Using the matrix, you’d probably tackle (A) right away for a quick win. Next, you’d start scoping out (B) as your next priority, while putting the behemoth (C) on the longer-term roadmap. Getting onboarding right is a cornerstone of success, so as you plan, you might want to review some customer onboarding best practices for inspiration.

Let’s try another theme: “Support Quality.” Customers are complaining about slow response times.

- Quick Win (Low Effort, High Impact): Create saved replies for the top five most common questions to help agents respond faster.

- Major Project (High Effort, High Impact): Implement a brand-new support ticketing system with better automation.

By knocking out the quick win first, you can start improving the customer experience immediately while you plan the larger, more complex project. This approach builds momentum and, more importantly, shows your customers you’re listening—a surefire way to boost CSAT in both the short and long term.

Automating Your Customer Feedback Loop

Trying to manually track every piece of customer feedback as you grow is a surefire way to let things slip through the cracks. A frustrated comment buried in a support ticket or a low survey score can easily get lost, leaving a customer feeling like they’re shouting into the void. The secret to avoiding this mess? Building an automated system that turns feedback into immediate, tangible action.

This isn’t about replacing the human touch; it’s about amplifying it. Good automation makes sure the right information lands in front of the right people at the right time. It frees up your team to focus on actually solving problems instead of just shuffling data around. This is how you start making feedback truly actionable, in real-time.

Designing Your Instant-Action Workflow

Let’s walk through a real-world scenario. A customer gives you a low CSAT score—say, a 2 out of 5—in a feedback tool like HappyPanda. In a manual world, that score might sit in a spreadsheet until someone gets around to it next week. In an automated one, a chain reaction kicks off instantly.

- The low score automatically creates a new, high-priority ticket in a project management tool like Linear, assigned directly to the product team.

- At the same time, a notification pings a dedicated Slack channel, alerting the customer success manager who owns that account.

- The customer immediately gets a personalised, automated email. It acknowledges their feedback and lets them know a real person is on the case.

This simple workflow flips a moment of friction into an opportunity to seriously impress someone. It shows you’re not just collecting scores for a report—you’re listening and reacting with genuine urgency. The whole process becomes a well-oiled machine.
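Here’s one way the routing logic behind that chain reaction might look in Python. To be clear, this sketch doesn’t call any real HappyPanda, Linear, or Slack API. It only decides which actions to take and returns them as plain dicts, which you’d then hand to whatever integration or automation tool you use. The field names, the `#csat-alerts` channel, and the template name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CsatResponse:
    customer_email: str
    account_owner: str  # the customer success manager for this account
    score: int          # 1-5 rating
    comment: str = ""


def plan_actions(response, low_score_max=2):
    """Return the follow-up actions for one survey response (empty if none needed)."""
    if response.score > low_score_max:
        return []
    summary = f"Low CSAT ({response.score}/5) from {response.customer_email}"
    return [
        # 1. High-priority ticket for the product team.
        {"action": "create_ticket", "team": "product", "priority": "high",
         "title": summary, "body": response.comment},
        # 2. Alert the account owner in a shared channel (channel name is made up).
        {"action": "notify_channel", "channel": "#csat-alerts",
         "mention": response.account_owner, "text": summary},
        # 3. Acknowledge the customer straight away.
        {"action": "send_email", "to": response.customer_email,
         "template": "low_score_acknowledgement"},
    ]


response = CsatResponse("sam@example.com", "dana", 2, "Export keeps failing")
for action in plan_actions(response):
    print(action["action"])
```

Separating “decide what to do” from “call the external tool” keeps the rules easy to test, and lets you swap tools later without rewriting the logic.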

This flow chart breaks down the basic process of turning raw feedback into prioritised actions.

As you can see, effective feedback management isn’t a chaotic scramble. It’s a structured process that moves logically from collection to decisive action.

The Power of Integration and CRM

Connecting your tools is the backbone of any decent feedback loop. Modern CRM systems are non-negotiable here, especially in Southeast Asia, where their link to customer satisfaction is undeniable. A recent report, for example, highlighted that Indonesian SMEs see sales uplifts of up to 30% within a year of adopting a CRM. It’s a sign of how a central hub for customer data fuels better, more personalised interactions that directly boost satisfaction.

When you integrate your feedback tool with your CRM, your team can see a customer’s entire history right alongside their latest comments. That context is pure gold for your support and success teams.

An automated feedback loop does more than save time. It builds a company culture where every team—from product to marketing—is constantly connected to the voice of the customer.

Crafting Automated Yet Personal Follow-Ups

Just because it’s automated doesn’t mean it has to feel robotic. The real goal is to scale personalised communication, not kill it. You can set up simple rules to trigger different follow-up emails based on the CSAT score a customer leaves.

- Positive Score (4-5): Trigger an email that thanks them for the feedback. This is also the perfect, low-friction moment to ask for a public review or a testimonial.

- Neutral Score (3): Send a message asking for more detail. Something as simple as, “Thanks for your feedback! What’s one thing we could do to make your experience a ‘5’ next time?” can uncover some absolute gems.

- Negative Score (1-2): This needs an immediate, empathetic response that acknowledges their frustration and clearly sets expectations for a follow-up from your team.

This segmented approach shows customers you’re actually paying attention to their specific experience. It proves their voice matters, which is a massive driver of loyalty and a critical part of any strategy for how to improve CSAT.
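The rules above boil down to a tiny lookup. Here’s a minimal Python sketch, assuming a 1 to 5 scale; the template names are invented for the example and would map to whatever emails you’ve written.

```python
def follow_up_template(score):
    """Pick the follow-up email for a 1-5 CSAT score."""
    if not 1 <= score <= 5:
        raise ValueError(f"score must be between 1 and 5, got {score}")
    if score >= 4:
        return "thank_you_and_review_request"   # positive: thank them, ask for a review
    if score == 3:
        return "ask_for_more_detail"            # neutral: ask what would make it a 5
    return "empathetic_acknowledgement"         # negative: acknowledge, set expectations


for score in (5, 3, 1):
    print(score, "->", follow_up_template(score))
```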

Ultimately, closing the feedback loop is about making a promise to your customers: their input won’t just disappear into a black hole. For a deeper dive, check out our guide on the art of closing the feedback loop effectively. By building an automated, integrated system, you ensure every piece of feedback is heard, routed, and acted upon, turning potential detractors into your most passionate fans.

Running Experiments to Validate CSAT Initiatives

Making big changes based on a gut feeling is a pretty risky way to steer your product. When it comes to something as vital as customer satisfaction, you need more than just a hunch—you need cold, hard proof. The best way to figure out how to improve CSAT is to put on your lab coat and treat every potential improvement like a structured experiment.

This approach pulls you out of a reactive ‘firefighting’ mode and into a continuous cycle of learning and validation. Instead of just pushing changes live and crossing your fingers, you’ll be able to prove that your initiatives are actually making a positive difference to your users’ experience. It’s all about making data-driven decisions, one test at a time.

First Things First: Form a Clear Hypothesis

Every decent experiment starts with a clear, testable hypothesis. This is just a simple statement declaring what you believe the outcome of your change will be. A strong hypothesis isn’t some vague guess; it’s a specific prediction that connects an action to a measurable result.

Let’s say you’ve noticed from your HappyPanda feedback that users are getting tripped up on a particularly complex feature page. You believe that offering a bit of help before they have to ask for it could boost their confidence and, in turn, their satisfaction.

Your hypothesis might look something like this:

By implementing a proactive chat prompt on the ‘Advanced Reporting’ page, we will reduce user friction and increase the CSAT score for that specific feature by 10% within 30 days.

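This statement is powerful because it’s specific. It nails down the action (proactive chat), the target audience (users on a specific page), the metric (CSAT score), and a measurable goal (a 10% increase). That kind of clarity is absolutely essential for a successful experiment. One detail to pin down before you start: decide whether “10%” means a relative lift (say, 70% to 77%) or ten percentage points (70% to 80%), because the two are very different targets.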

Designing and Running a Clean A/B Test

With your hypothesis in hand, the next move is to design a clean A/B test. This just means splitting your users into two groups to compare their experiences directly. A well-designed test makes sure that the results you see are genuinely caused by your change, not just random chance.

Here’s how you could set up the experiment for our proactive chat prompt:

- Control Group (Group A): These users will see the ‘Advanced Reporting’ page exactly as it is right now. No changes. This is your baseline.

- Variant Group (Group B): These users will see the same page, but with the new proactive chat prompt popping up after they’ve been on the page for, say, 20 seconds.

The key here is to assign users to each group at random and keep everything else the same for both groups. The only difference should be the chat prompt. This isolation is what lets you confidently attribute any change in CSAT directly to your intervention. You’d then run the test for a set period, long enough to collect enough responses for a statistically meaningful comparison, and survey both groups about their experience with that specific feature.
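One common way to split users is to hash a stable user ID, so each person always lands in the same group without you storing the assignment anywhere. Here’s a minimal Python sketch of that technique. The experiment name and user ID format are placeholders, and a dedicated feature-flag or experimentation tool would do this for you.

```python
import hashlib


def assign_group(user_id, experiment="advanced-reporting-chat-prompt"):
    """Deterministically assign a user to group A (control) or B (variant)."""
    # Including the experiment name means a user's group in one test
    # doesn't determine their group in the next one.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "B" if int(digest, 16) % 2 else "A"


groups = [assign_group(f"user-{i}") for i in range(1000)]
print("A:", groups.count("A"), "B:", groups.count("B"))
```

Because the assignment depends only on the ID and the experiment name, a returning user sees the same version of the page every time, which keeps the comparison clean.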

Measuring the Outcome and Deciding What’s Next

Once the experiment is over, it’s time to dig into the results. Did the CSAT score for Group B (the variant) actually go up? And if it did, did it hit your predicted 10% target? This is the moment of truth where you either validate or invalidate your initial hypothesis.

You’ll land on one of three possible outcomes, each with a clear path forward:

- The Hypothesis Was Correct: Brilliant! The data shows your proactive chat prompt made a real difference. Time to roll this feature out to 100% of your users and chalk up a win.

- The Result Was Inconclusive: Maybe there was a tiny lift in CSAT, but not enough to be statistically significant. This isn’t a failure; it’s a learning opportunity. Perhaps the prompt’s timing was off, or the message wasn’t quite right. You can form a new hypothesis and run another iteration.

- The Hypothesis Was Wrong: The change had no effect, or worse, it actually lowered satisfaction. This is also a valuable outcome! You’ve just saved yourself from rolling out a feature that doesn’t help your customers. Now you can confidently scrap that idea and focus your energy elsewhere.
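To check whether a difference between the groups is more than noise, one standard option is a two-proportion z-test on the share of satisfied responses. The Python sketch below uses only the standard library. The response counts are made up, the 0.05 significance level is a convention rather than a rule, and the `decide` helper is a simplification: it treats any non-significant result as inconclusive and doesn’t check whether you hit your 10% target.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(sat_a, n_a, sat_b, n_b):
    """Compare satisfied-response rates. Returns (lift, z, two-sided p-value)."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both groups need at least one response")
    p_a, p_b = sat_a / n_a, sat_b / n_b
    pooled = (sat_a + sat_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in responses; the test is undefined")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, z, p_value


def decide(lift, p_value, alpha=0.05):
    """Map a result onto the three outcomes (simplified; see the note above)."""
    if p_value >= alpha:
        return "inconclusive: refine the hypothesis and rerun"
    return "roll out" if lift > 0 else "scrap"


# Hypothetical results: satisfied responses out of total responses per group.
lift, z, p_value = two_proportion_z_test(sat_a=140, n_a=200, sat_b=164, n_b=200)
print(f"lift={lift:.2%}  z={z:.2f}  p={p_value:.4f}  ->  {decide(lift, p_value)}")
```

With small samples, even a healthy-looking lift can come back inconclusive, which is one more reason to decide how long the test will run before you start it.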

By treating your CSAT initiatives as experiments, you build a solid, evidence-based process for improvement. Every test, win or lose, provides valuable insights that sharpen your understanding of what your customers truly want. This methodical approach is how you make consistent, measurable progress and turn customer satisfaction into a predictable driver for growth.

Meeting Rising Customer Expectations for Quality

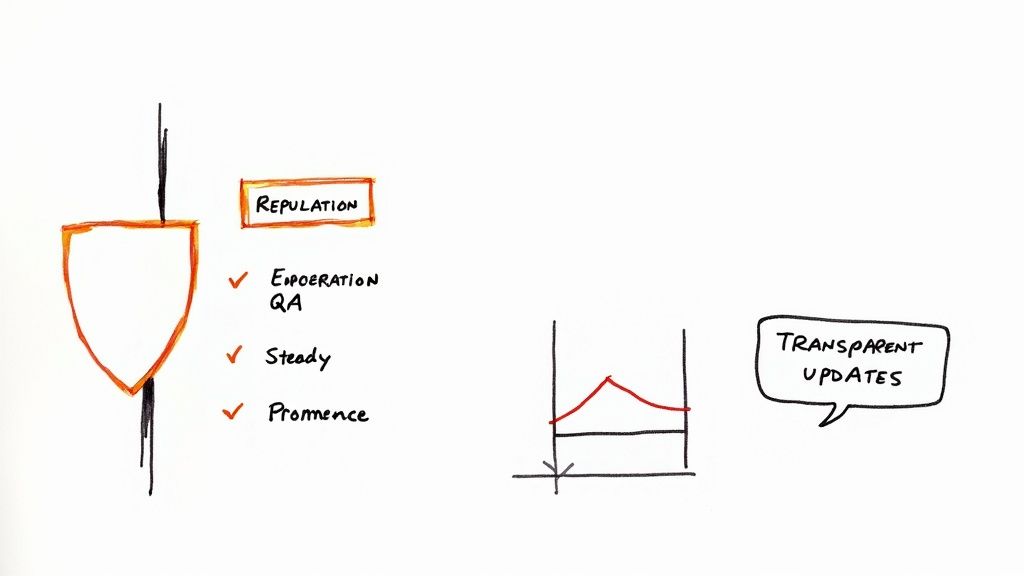

In a packed SaaS market, just having a product that works is the bare minimum. It’s the price of entry. To actually stand out and see those CSAT scores climb, you need to build a reputation for undeniable quality. Today’s customers aren’t just buying a tool; they’re investing in a solution they can count on, and their expectations have never been higher.

This is especially true in fast-growing regions like Southeast Asia. Consumer expectations there have skyrocketed, with a brand’s reputation now a massive factor in their decisions. A 2025 study from Bluebell Group revealed that 91% of Southeast Asian consumers now see reputation as important, a huge leap from just 71% in 2021.

Even more telling? 83% are willing to pay more for proven quality. This shows that reliability isn’t just a feature—it’s a premium feature people will happily pay for. You can discover more insights about these consumer trends and see just how deep this shift goes.

The takeaway is simple: quality can’t be an afterthought or a line item on a QA checklist. It needs to be a core pillar of your entire customer experience. It’s about building trust that your product will do what you promised, every single time.

Make Quality a Company-Wide Mission

Quality isn’t just the engineering team’s job. It’s a mindset that should run through every single department, from product development all the way to customer support. When your whole organisation is obsessed with delivering a high-calibre experience, it becomes a powerful competitive advantage.

So, how do you make it happen?

- Invest in Robust QA: Go way beyond basic bug hunting. Think automated testing, regression testing, and user acceptance testing to catch issues long before they hit your customers.

- Prioritise Stability: When you’re planning your roadmap, give stability improvements and bug fixes the same weight as shiny new features. A reliable product is almost always more valuable than one that’s feature-packed but buggy.

- Empower Your Support Team: Give your support team the tools and knowledge to do more than just answer questions. They should be your front line for identifying and escalating quality issues effectively.

A strong brand reputation for quality acts as a shield during those inevitable service hiccups. When customers already trust you, they’re far more likely to be patient and understanding if something goes wrong, which helps protect your CSAT scores.

And if you want to truly meet those rising expectations, don’t forget to consider how web accessibility boosts user experience and sales for everyone. An accessible product is, by its very nature, a higher-quality product.

Practice Radical Transparency

Building a reputation for quality also means being honest and upfront, especially when things aren’t perfect. Hiding bugs or downplaying downtime will destroy trust faster than almost anything else.

Instead, make transparency a core part of your strategy.

For instance, if you’re deploying an update that might cause a temporary slowdown, tell people. Communicate it clearly and proactively. Use your in-app changelog or a quick email announcement to let users know what’s happening and why.

And when a bug is found, don’t just fix it quietly in the background. Acknowledge it publicly, explain the impact, and give a timeline for the solution. That level of transparency shows you respect your customers’ time and reinforces that you’re a reliable partner committed to quality—a cornerstone of any strategy to improve CSAT.

Got Questions About CSAT? We’ve Got Answers.

When you start digging into customer satisfaction, a few questions always pop up. It’s only natural. As you get this playbook rolling, you’ll probably find yourself wondering about benchmarks, best practices, and how all these different metrics actually play nice together.

So, let’s clear the air. Here are some quick, no-fluff answers to the questions we hear most often from SaaS leaders.

What’s a “Good” CSAT Score Anyway?

Everyone wants to know the magic number. While it can shift a bit depending on your industry, a solid CSAT score for most SaaS companies is 80% or higher. Hitting that range is a good sign that most of your customers are leaving their interactions with your product and team feeling pretty good.

But here’s the thing: that absolute number isn’t the whole story. Not even close.

What’s far more important is the trend. Is your score moving up? A consistently improving score, even if it’s currently below that 80% mark, is a massive win. It tells you your efforts are paying off and you’re genuinely heading in the right direction.
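For reference, CSAT is commonly calculated as the share of respondents who picked one of the top ratings. Here’s a small Python sketch of that convention, assuming a 1 to 5 scale where 4 and 5 count as satisfied. The sample ratings are invented.

```python
def csat_percent(ratings, satisfied_threshold=4):
    """Percentage of ratings at or above the threshold."""
    if not ratings:
        raise ValueError("no responses to score")
    satisfied = sum(1 for rating in ratings if rating >= satisfied_threshold)
    return 100 * satisfied / len(ratings)


# Hypothetical ratings from two consecutive months.
last_month = [5, 4, 3, 2, 4, 5, 1, 4, 3, 5]
this_month = [5, 4, 4, 2, 4, 5, 3, 4, 5, 5]

print(csat_percent(last_month), "->", csat_percent(this_month))
```

In this made-up data the score moves from 60% to 80%. The second number matters, but the direction of travel is the part worth celebrating.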

How Often Should We Be Sending These Surveys?

This is a big one. The key is to avoid “survey fatigue”—that feeling your customers get when you bombard them with feedback requests until they just start ignoring you completely. The best approach is to be strategic and send surveys when the context is right.

Here’s a simple way to think about it:

- For specific interactions (Transactional): Did a customer just close a support ticket? Finish your onboarding flow? Send the survey immediately. You want to catch them while the experience is still fresh in their mind.

- For the big picture (Relationship): If you want to know how they feel about your product or brand overall, think quarterly or semi-annually. This gives you a regular pulse check without driving your customers crazy.
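A simple guard against survey fatigue is to check when you last surveyed someone before sending another request. The Python sketch below assumes you track the last send time per customer and per survey type. The 90-day gap reflects the quarterly cadence above; the 7-day cooldown for transactional surveys is our own assumption, so adjust it to how often your customers actually interact with you.

```python
from datetime import datetime, timedelta

# Minimum time between surveys of the same kind for one customer.
MIN_GAP = {
    "transactional": timedelta(days=7),   # assumed cooldown, not a standard
    "relationship": timedelta(days=90),   # roughly quarterly
}


def should_send_survey(kind, now, last_sent_at=None):
    """Return True if enough time has passed since the last survey of this kind."""
    if kind not in MIN_GAP:
        raise ValueError(f"unknown survey kind: {kind}")
    if last_sent_at is None:
        return True  # never surveyed before
    return now - last_sent_at >= MIN_GAP[kind]


now = datetime(2025, 6, 1, 9, 0)
print(should_send_survey("transactional", now, now - timedelta(days=2)))
print(should_send_survey("relationship", now, now - timedelta(days=120)))
```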

CSAT Is Great, But What Else Should We Track?

CSAT is a powerhouse, but it shouldn’t be your only metric. Relying on it alone is like trying to see a whole room by looking through a keyhole. To get the full 360-degree view of your customer experience, you need to pair it with a couple of other key players.

Think of CSAT, NPS, and CES as a three-legged stool. Each one measures a different, vital part of the customer journey. You need all three to get a stable, complete picture of customer health.

Here’s how they fit together to give you a much richer story:

- Net Promoter Score (NPS): This is all about long-term loyalty. It answers the question, “Are our customers happy enough to stick their necks out and recommend us?”

- Customer Effort Score (CES): This one is beautifully simple—it measures how easy you are to do business with. CES is brilliant at pinpointing friction and showing you exactly where customers are struggling.

Ready to turn customer feedback into your biggest growth driver? HappyPanda combines CSAT surveys, onboarding checklists, changelogs, and automated email sequences into one simple platform. Get started in 10 minutes at https://happypanda.ai.